AWS Solution Architect Associate Exam Study Notes: S3 (Simple Storage Service), CloudFront and Storage Gateway

These notes were written while working through the A Cloud Guru AWS Certified Solutions Architect - Associate online course. These notes are partly from the videos, and also from various other online sources. Primarily, they’re notes for me, but you might find them useful too.

Since the AWS platform is changing so quickly, it’s possible that some of these notes may be out of date, so please take that into consideration if you are reading them.

Please let me know in the comments below if you have any corrections or updates which you’d like me to add.

This post was last updated in March, 2019.

S3 (Simple Storage Service)

S3 buckets are created in a specific region; however, bucket names must be globally unique.

S3 Supports:

- Versioning

- Encryption

- Static website hosting

- Access logs - server access logging can be used to track requests for access to your bucket, and can be used for internal security and access audits

An S3 object consists of:

- Key

- Value

- Version ID (used when versioning is turned on)

- Metadata (data about the object such as date uploaded)

- Subresources:

  - ACLs (Access Control Lists, i.e. who can access the file)

  - Torrent

Charges

S3 charges for:

- The volume of data you have stored

- The number of requests

- Data transfer out (including to buckets in other zones/regions)

- Transfer Acceleration (which routes transfers through AWS CloudFront edge locations and over the AWS network to the bucket)

Storage Class Tiers

S3 has:

- 99.99% availability

- 99.999999999% durability (11 9’s, you won’t lose a file due to S3 failure)

S3 IA (Infrequent Access):

- 99.9% availability

- 99.999999999% durability (11 9’s, you won’t lose a file due to S3 failure)

- Best for situations where you want lower costs than standard S3, and a file doesn’t need to be always accessible, but it’s critical that the file is not lost.

S3 RRS (Reduced Redundancy Storage) has:

- 99.99% availability

- 99.99% durability (so you may lose a file). This means that RRS is best for situations where you want lower costs than standard S3, and you’re storing non-critical data, or data which can be regenerated in the case where the file is lost.

S3 RRS is not advertised anymore, but may still be mentioned in the exam.

Glacier (which is not actually in the S3 family of services):

- Extremely cheap long term storage for archiving

- Retrieval takes 3-5 hours to complete

- Has a 90 day minimum storage duration

- The first 10GB of data retrieval per month is free

Note that Glacier is not supported by AWS Import/Export. To use that functionality, you must first restore the data into S3 via the S3 Lifecycle Restore feature.

Uploading to S3

200 OK is returned after a successful upload.

The minimum file size of an object is 0 bytes.

Multipart upload is supported via the S3 API. It’s recommended to always use multipart uploads for files larger than 100 MB.

S3 has:

- Atomic updates - you’ll never have a situation where a file is partly updated; it’ll either fully succeed (the file will be updated), or fully fail (the file will NOT be updated).

- Read after write consistency of PUTs for new objects; you can read an object immediately after upload.

- Eventual consistency for updates and DELETEs; an object won’t immediately be updated. If you try to access an object immediately after it’s been updated, you may get the old version. It takes a few seconds for an update or delete to propagate.

Amazon S3: Data storage and movement

To build a powerful cloud application you need scalable storage. Amazon Simple Storage Service (S3) provides the tools needed to store and move data around the globe using ‘buckets.’

There are four storage classes to choose from in S3, and the cost of storing data in each varies greatly.

1) Amazon Standard Storage

For data that is frequently accessed, such as logs for the last 24 hours or a media file that is in heavy use, Amazon Standard Storage offers affordable, highly available storage capacity that can grow as quickly as your organization needs. You are charged by the gigabyte stored and by the number of requests to access, delete, list, copy or get data in S3. Expensive storage arrays are not required to get a new endeavor off the ground.

2) Amazon Infrequent Access Storage

Using the S3 interface, you can monitor and manage resources that are necessary for your operation but are used far less frequently. Data in the Infrequent Access class has 99.9% availability (less than 9 hours of downtime in a year), compared with 99.99% availability (less than an hour of downtime in a year) for standard storage, but it costs far less per gigabyte to store than in Standard Storage.
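The downtime figures quoted for the two classes follow directly from the availability percentages. A minimal sketch of the arithmetic, assuming a 365-day year (the function name is only for illustration):

```python
from fractions import Fraction

HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def max_downtime_hours(availability_percent: str) -> float:
    """Return the yearly downtime (in hours) implied by an availability percentage."""
    # Fraction avoids floating point noise when subtracting values close to 1.
    unavailable = 1 - Fraction(availability_percent) / 100
    return float(unavailable * HOURS_PER_YEAR)


print(max_downtime_hours("99.9"))   # Infrequent Access
print(max_downtime_hours("99.99"))  # Standard
```

The first value is just under 9 hours and the second is just under one hour, which matches the figures in the paragraph.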

3) Amazon Glacier

For deep storage items that must be retained but are rarely used, Amazon Glacier provides long-term archiving solutions. Data stored in Glacier can take hours to retrieve instead of seconds, but the cost is a fraction of standard storage. With redundant data sites all over the world Glacier ensures your archival data is secure and safe no matter what happens.

4) Amazon Reduced Redundancy Storage

This service allows for the storing of non-essential, easily reproducible data, without the same amount of redundancy and durability as the higher-level storage tiers.

Understanding these storage concepts in S3 is essential before building your cloud architecture. Now let’s take a look at some of the other AWS services that can be used to build scalable cloud applications.

Access

The S3 bucket URL format is: s3-region.amazonaws.com/bucketname/path-to-file, e.g. https://s3-ap-southeast-2.amazonaws.com/lithiumdream-wpmedia2017/2017/10/8e30689cd04457e1a7b44d590b0edfc1.jpg or https://lithiumdream-wpmedia2017.s3-ap-southeast-2.amazonaws.com/2017/10/8e30689cd04457e1a7b44d590b0edfc1.jpg

If S3 is being used as a static website, the bucket name is always part of the hostname and the website endpoint is served over HTTP only, so the URL format will be: http://lithiumdream-wpmedia2017.s3-website-ap-southeast-2.amazonaws.com/2017/10/8e30689cd04457e1a7b44d590b0edfc1.jpg

Note s3-region.amazonaws.com vs s3-website-region.amazonaws.com

By default, objects in a bucket are private.

In order to access a file via public DNS, you’ll need to make the file publicly available. Not even the root user can access the file via public DNS without making the file publicly available.

With ACLs (Access Control Lists), you can allow Read and/or Write access to both the objects in the bucket and the permissions to the object.

Bucket Policies override any ACLs - if you enable public access via a Bucket Policy, the object will be publicly accessible regardless of any ACLs which have been set up.
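As a rough illustration of enabling public access via a Bucket Policy, the sketch below builds a policy document that allows anyone to read every object in a bucket. The bucket name and the helper function are hypothetical; the resulting JSON would still need to be attached to the bucket through the console, the CLI or an SDK.

```python
import json


def public_read_policy(bucket_name: str) -> str:
    """Return a bucket policy (as JSON) granting public read access to all objects."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # The trailing /* applies the statement to every object in the bucket.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


# "example-bucket" is a placeholder name, not a real bucket.
print(public_read_policy("example-bucket"))
```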

Buckets can be configured to log all requests. Logs can be either written to the bucket containing the accessed objects, or to a different bucket.

OAI (Origin Access Identity) can be used for allowing CloudFront to access objects in an S3 bucket, while preventing the S3 bucket itself from being publicly accessible directly. This prevents anybody from bypassing CloudFront and using the S3 URL to get content that you want to restrict access to.

Encryption

Data in transit is encrypted via TLS

Data at rest can be encrypted by:

- Server-side encryption

  - SSE-S3 - Amazon S3-Managed Keys, where S3 manages the keys, encrypting each object with a unique key using AES-256, and even encrypts the key itself with a master key which is regularly rotated.

  - SSE-KMS - AWS KMS-Managed Keys - Similar to SSE-S3, but with an option to provide an audit trail of when your key is used, and by whom, and also the option to create and manage keys yourself.

  - SSE-C - Customer-Provided Keys - Where you manage the encryption keys, and AWS manages encryption and decryption as it reads from and writes to disk.

- Client-side encryption
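To make the three server-side options more concrete, here is a minimal sketch of the request headers that select each mode on an upload. The helper function and its arguments are made up for illustration; the header names are the ones used by the S3 REST API, but treat the details as something to double check against the S3 documentation before relying on them.

```python
import base64
import hashlib
from typing import Optional


def sse_headers(mode: str, kms_key_id: Optional[str] = None,
                customer_key: Optional[bytes] = None) -> dict:
    """Build the encryption headers for a PUT request (hypothetical helper)."""
    if mode == "SSE-S3":
        # S3 manages the keys; AES256 is the only value for this mode.
        return {"x-amz-server-side-encryption": "AES256"}
    if mode == "SSE-KMS":
        headers = {"x-amz-server-side-encryption": "aws:kms"}
        if kms_key_id is not None:
            # Optional: name a specific KMS key instead of the default one.
            headers["x-amz-server-side-encryption-aws-kms-key-id"] = kms_key_id
        return headers
    if mode == "SSE-C":
        if customer_key is None or len(customer_key) != 32:
            raise ValueError("SSE-C needs a 256-bit (32 byte) key")
        # The key travels with every request, plus an MD5 digest as an integrity check.
        key_md5 = hashlib.md5(customer_key).digest()
        return {
            "x-amz-server-side-encryption-customer-algorithm": "AES256",
            "x-amz-server-side-encryption-customer-key":
                base64.b64encode(customer_key).decode("ascii"),
            "x-amz-server-side-encryption-customer-key-MD5":
                base64.b64encode(key_md5).decode("ascii"),
        }
    raise ValueError(f"unknown mode: {mode}")


print(sse_headers("SSE-S3"))
print(sse_headers("SSE-KMS"))
```

With SSE-C the same key has to be supplied again on every read, since AWS does not store it.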

Storage

S3 objects are stored in buckets, which can be thought of as folders.

Buckets are private by default.

S3 buckets are suitable for objects or flat files. S3 is NOT suitable for storing an OS or database (use block storage for this).

There is a maximum of 100 buckets per account by default.

S3 objects are stored and sorted by name in lexicographical order, which means that there can be performance bottlenecks if you have a large number of objects in your S3 bucket which have similar names.

For S3 buckets with a large number of files, it’s recommended that you add a salt to the beginning of each file name, to help avoid performance bottlenecks and ensure that files are evenly distributed throughout the datacenter.
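One way to salt key names, sketched below, is to prepend a few characters of a hash of the original key. The choice of SHA-256, the four character prefix and the `-` separator are all assumptions made for this example rather than anything S3 requires.

```python
import hashlib


def salted_key(key: str, salt_length: int = 4) -> str:
    """Prefix a key with a short, deterministic hash so similar names spread out."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return f"{digest[:salt_length]}-{key}"


# Keys that differ only slightly get unrelated prefixes.
for name in ["2017/10/01/photo.jpg", "2017/10/02/photo.jpg"]:
    print(salted_key(name))
```

Because the salt is derived from the key itself, the full object name can always be recomputed from the original name when reading the object back.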

S3 objects are stored in multiple facilities; S3 is designed to sustain the loss of 2 facilities concurrently.

There is unlimited storage available with support for objects with sizes from 0 bytes to 5TB.

Versioning

Once versioning has been enabled on a bucket, it can only be suspended, not removed. If you want to get rid of versioning, you’ll need to copy files to a new bucket which has never had versioning enabled, and update any references pointing to the old bucket to point to the new bucket instead.

If you enable versioning on an existing bucket, versioning will not be applied to existing objects; versioning will only apply to any new or updated objects.

Cross-region replication requires that versioning is enabled.

Side note: Dropbox uses S3 versioning.

Lifecycle management

There is tiered storage available, and you can use lifecycle management to transition through the tiers. For example, there might be a requirement that invoices for the last 24 months are immediately available, and that older invoices don’t need to be immediately available, but must be stored for compliance reasons for 7 years. For this scenario, you may decide to keep the invoices younger than 24 months in S3 for immediate access, and use lifecycle management to move the invoices to Glacier (where storage is extremely cheap, with the tradeoff that it takes 3-5 hours to restore an object) for long term storage.

Lifecycle management also supports permanently deleting files after a configurable amount of time, e.g. after the file has been migrated to Glacier.
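The invoice scenario could be expressed as a lifecycle configuration along the following lines. This is only a sketch: the `invoices/` prefix and the rule ID are hypothetical, and the day counts are rough conversions of 24 months and 7 years. The dictionary follows the shape that the S3 lifecycle API accepts (for example as the `LifecycleConfiguration` argument of boto3's `put_bucket_lifecycle_configuration`), but nothing is sent to AWS here.

```python
import json

DAYS_24_MONTHS = 730   # roughly 24 months
DAYS_7_YEARS = 2555    # roughly 7 years


def invoice_lifecycle(prefix: str = "invoices/") -> dict:
    """Build a lifecycle configuration: Glacier after 24 months, delete after 7 years."""
    return {
        "Rules": [
            {
                "ID": "archive-then-expire-invoices",  # hypothetical rule name
                "Filter": {"Prefix": prefix},          # only objects under this prefix
                "Status": "Enabled",
                "Transitions": [
                    {"Days": DAYS_24_MONTHS, "StorageClass": "GLACIER"}
                ],
                "Expiration": {"Days": DAYS_7_YEARS},
            }
        ]
    }


print(json.dumps(invoice_lifecycle(), indent=2))
```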

CloudFront

CloudFront is AWS’s CDN.

CloudFront supports Perfect Forward Secrecy.

Edge Locations

Edge locations are separate from, and different to, AWS AZs and Regions.

Edge locations support:

- Reads

- Writes - enabling writes means that customers can upload files to their local edge location, which can speed up data transfer for them

Origin

The origin is the name of the source location.

The origin can be:

- An S3 bucket

- An EC2 Instance

- An ELB

- Route 53

- Outside of AWS

Distribution

A “distribution” is the collection of edge locations in the CDN

There are two different types of distribution:

- Web distribution - used for websites

- RTMP distribution - used for streaming content (e.g. video), and only supported if the origin is S3; other origins such as EC2 do not support RTMP

It’s possible to clear cached items. If the cache isn’t cleared, all items live for their configured TTL (Time To Live).

Read access can be restricted via pre-signed URLs and cookies, e.g. to ensure only certain customers can access certain objects.

Geo restrictions can be created using whitelists/blacklists.

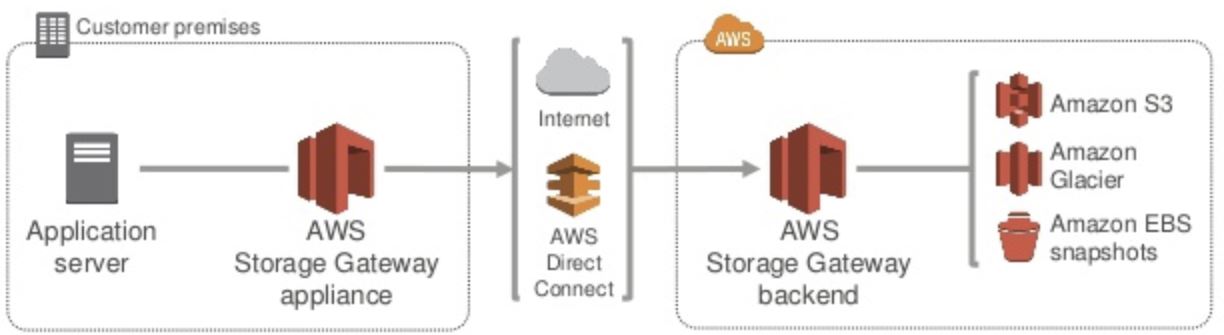

Storage Gateway

A Storage Gateway is a software appliance which sits in your data center, and securely connects your on-premises environment to AWS storage.

There are four types of Storage Gateway (the Volume Gateway comes in two modes):

- File Gateway - uses NFS to store files in S3

- Volume Gateway - a virtual iSCSI disk. Block based, not object based like S3.

  - Cached volumes - the entire data set is stored in the cloud, with recently-read data kept on site, for quick retrieval of frequently accessed data.

  - Stored volumes - similar to cached volumes, but the entire data set is stored on-premises, with data being incrementally backed up to S3

- Tape Gateway - virtual tapes, backed up to Glacier. Used by popular backup applications such as NetBackup

All data transferred between Storage Gateway and S3 is encrypted using SSL. By default, all data stored in S3 is encrypted server-side with SSE-S3, so your data is automatically encrypted at rest.

S3 IS OBJECT STORAGE

Simple web service interface

Use Cases:

- backup and archive for on-premises or cloud data

- content media and software storage and distribution

- big data analytics

- static website hosting

- cloud native mobile and internet application hosting

- disaster recovery

manage data through lifecycle policies

S3 BASICS

Bucket names must be unique across all AWS accounts, like DNS names

Best practice is to name buckets after a domain name.

namespace is global but buckets are created in a specific region

- minimize latency

- satisfy data locality and sovereignty concerns

- disaster recovery and compliance

object size from 0 bytes to 5TB

unlimited amount of data

objects contain data and metadata

- system metadata is used by S3 and contains date last modified, object size, md5 digest

- user metadata is optional with use of tags

object identified by unique identifier called a key

keys can be up to 1024 bytes of UTF-8 and may contain slashes, dots and dashes

example:

http://mybucket.s3.amazonaws.com/files/mydoc.txt

- mybucket is bucket name

- files/mydoc.txt is key
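To make the bucket/key split concrete, here is a small sketch that pulls both out of a virtual-hosted-style URL (bucket name in the hostname). The helper name is made up, and the regular expression is an assumption that only covers `<bucket>.s3.amazonaws.com` and `<bucket>.s3-<region>.amazonaws.com` style hosts.

```python
import re
from urllib.parse import unquote, urlsplit

# Bucket name is everything before ".s3." or ".s3-<region>." in the hostname.
_HOST_PATTERN = re.compile(r"^(?P<bucket>.+?)\.s3[.-][a-z0-9.-]*amazonaws\.com$")


def split_s3_url(url: str) -> tuple:
    """Return (bucket, key) for a virtual-hosted-style S3 URL."""
    parts = urlsplit(url)
    match = _HOST_PATTERN.match(parts.hostname or "")
    if match is None:
        raise ValueError(f"not a virtual-hosted-style S3 URL: {url}")
    # The key is the path without its leading slash; slashes inside it are kept.
    key = unquote(parts.path.lstrip("/"))
    return match.group("bucket"), key


print(split_s3_url("http://mybucket.s3.amazonaws.com/files/mydoc.txt"))
```

Note that the slash in `files/mydoc.txt` is part of the key; there is no real `files` directory.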

S3 OPERATIONS

- Create/delete a bucket

- Write an object

- Read an object

- Delete an object

- List Keys in a bucket

REST Interface maps to HTTP verbs

HTTPS for secure requests

Durability and Availability

- durability “Will my data still be there?” 99.999999999%

- availability “Can I access my data now?” 99.99%

durability is achieved by storing data across multiple devices in multiple facilities within a region
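A quick way to get a feel for what 11 9’s of durability means is to turn the percentage into an expected number of lost objects per year. The sketch below does that arithmetic; the function name and the object counts are made up for the example.

```python
from fractions import Fraction


def expected_annual_losses(object_count: int,
                           durability_percent: str = "99.999999999") -> Fraction:
    """Expected number of objects lost per year at a given durability."""
    # Fraction keeps the tiny loss probability exact instead of rounding it away.
    loss_probability = 1 - Fraction(durability_percent) / 100
    return object_count * loss_probability


standard = expected_annual_losses(10_000_000)
print(float(standard))      # expected objects lost per year
print(float(1 / standard))  # average number of years per single lost object

# Reduced Redundancy Storage at 99.99% durability, for comparison.
print(float(expected_annual_losses(10_000, "99.99")))
```

With ten million objects at 11 9’s, the expectation works out to a single lost object roughly every ten thousand years, while ten thousand objects in RRS would be expected to lose about one per year.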

Best practice is to protect data from user error using features such as versioning, cross region replication and MFA delete

Data is ‘Eventually Consistent’

Changes may take time to propagate. After a PUT to an existing object or a DELETE, subsequent GETs may return the old file

S3 is secure by default. Only the person creating the bucket has access

Access through

- coarse grained using access control lists

- fine grained using bucket policies, IAM policies, query-string authentication

coarse grained – Read, Write, Full-Control

Bucket Policies are recommended

Static Website Hosting

- Create bucket with same name as website hostname

- upload static files to the bucket

- make all files public

- enable static website hosting for the bucket including an Index and Error doc

- Site available at <bucket>.s3-website-<awsregion>.amazonaws.com

- create friendly DNS name in your own domain and use a DNS CNAME or Route 53 alias

- Website now available
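The hosting steps above can be sketched in code as a website configuration plus the resulting endpoint name. The document names `index.html` and `error.html`, the bucket name and the helper functions are assumptions for illustration; the dictionary follows the shape the S3 website API accepts (for example as the `WebsiteConfiguration` argument of boto3's `put_bucket_website`), but no request is made here.

```python
def website_configuration(index_document: str = "index.html",
                          error_document: str = "error.html") -> dict:
    """Build the Index and Error document settings for static website hosting."""
    return {
        "IndexDocument": {"Suffix": index_document},
        "ErrorDocument": {"Key": error_document},
    }


def website_endpoint(bucket: str, region: str) -> str:
    """Return the hostname of the bucket's website endpoint."""
    return f"{bucket}.s3-website-{region}.amazonaws.com"


# "www.example.com" is a placeholder; the bucket is named after the site's hostname.
print(website_configuration())
print(website_endpoint("www.example.com", "ap-southeast-2"))
```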

S3 ADVANCED FEATURES

PREFIXES AND DELIMITERS

flat structure in a bucket

prefixes and delimiters to emulate a file and folder hierarchy
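The sketch below emulates, in plain Python, how a listing with a prefix and a delimiter turns a flat set of keys into something that looks like folders. It only mimics the idea behind the S3 list operation (objects at the current level plus common prefixes); the function name and sample keys are made up.

```python
def list_level(keys, prefix="", delimiter="/"):
    """Return (objects, common_prefixes) for one 'folder' level of a flat key space."""
    objects = []
    common_prefixes = set()
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue
        remainder = key[len(prefix):]
        if delimiter in remainder:
            # Everything up to the next delimiter is rolled up into one "folder".
            folder = remainder.split(delimiter, 1)[0]
            common_prefixes.add(prefix + folder + delimiter)
        else:
            objects.append(key)
    return objects, sorted(common_prefixes)


keys = ["2017/10/a.jpg", "2017/11/b.jpg", "2018/01/c.jpg", "readme.txt"]
print(list_level(keys))                  # top level
print(list_level(keys, prefix="2017/"))  # inside the 2017/ "folder"
```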

STORAGE CLASSES

S3 Standard

- high durability 99.999999999%

- high availability 99.99%

- low latency

- high performance

- low first byte latency

- high throughput

S3 Standard Infrequent Access (IA)

- high durability 99.999999999%

- availability 99.9%, with low latency

- high throughput

- long-lived, less frequently accessed data

- lower price per GB

- minimum object size 128 KB

- minimum duration 30 days

S3 Reduced Redundancy Storage (RRS)

- lower durability 99.99%

- derived data that can be reproduced

Amazon Glacier

- secure

- durable

- extremely low cost

- no real time access

- restore 3-5 hours later

- retrieve 5% each month free

- When Amazon Glacier is used as an S3 storage class, data can only be retrieved through the S3 APIs. Client can set a data retrieval policy to prevent cost overruns

OBJECT LIFECYCLE MANAGEMENT

- manage files over the life of the document

- Lifecycle configs are attached to a bucket and can be applied to all objects or specified by a prefix

ENCRYPTION

- all sensitive data should be encrypted in flight and at rest

- in flight can use S3 SSL endpoints (HTTPS)

- at rest use server side encryption (SSE)

AWS Key Management Service

- uses 256-bit advanced encryption standard (AES)

- can encrypt on client side using Client Side encryption

SSE-S3 (AWS Managed Keys)

- fully integrated “check-box-style”

- AWS handles key management and key protection for S3

- Every object is encrypted with unique key

- further encrypted by a separate master key

- new master keys issued at least monthly with rotating keys

- keys stored on secure hosts

SSE-KMS (AWS KMS Keys)

- fully integrated, where Amazon handles key management and protection for S3 but client manages keys

- separate permissions for using master keys

- provides auditing – who used key to access which object and when

- can view failed attempts

SSE-C (Customer Provided Keys)

- Client maintains encryption keys but does not want to manage a client side encryption library

- AWS will do encryption/decryption of objects while client maintains keys

Client Side Encryption

- encrypt data on client side before sending to AWS S3

- use an AWS KMS managed customer master key or a client side master key

VERSIONING

- Protects data from accidental or malicious deletion

- once enabled, versioning cannot be removed from a bucket, only suspended

MFA DELETE

- On top of versioning, another layer of protection from deletion

- can only be enabled by the root account

PRE-SIGNED URLS

- S3 objects are private by default but can be shared with pre-signed URLs

- must provide:

  - security credentials

  - bucket name

  - object key

  - HTTP method

  - expiration date and time

MULTIPART UPLOAD

- support uploading or copying large objects

- use multipart upload api

- 3 step process

  - initiation

  - uploading of the parts

  - completion

- parts can be uploaded in arbitrary order

- should be used for objects > 100 MB

- must be used for objects > 5 GB

- AWS cli – multipart upload is automatic

- can set lifecycle policy on a bucket to abort incomplete multipart uploads after a specified number of days
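As a sketch of the "uploading of the parts" step, the function below splits an object of a given size into numbered byte ranges, one per part. The 100 MiB default part size is just this example's choice; the 5 MiB minimum part size and the 10,000 part maximum are S3 limits as I understand them, so verify them against the current documentation.

```python
MIB = 1024 * 1024
MIN_PART_SIZE = 5 * MIB   # every part except the last must be at least this big
MAX_PARTS = 10_000        # upper limit on parts in one multipart upload


def plan_parts(total_size: int, part_size: int = 100 * MIB) -> list:
    """Return a list of (part_number, first_byte, last_byte) tuples."""
    if part_size < MIN_PART_SIZE:
        raise ValueError("part size is below the 5 MiB minimum")
    parts = []
    offset = 0
    number = 1  # part numbers start at 1
    while offset < total_size:
        last_byte = min(offset + part_size, total_size) - 1
        parts.append((number, offset, last_byte))
        offset = last_byte + 1
        number += 1
    if len(parts) > MAX_PARTS:
        raise ValueError("too many parts; choose a larger part size")
    return parts


# A 250 MiB object splits into two full parts and one smaller final part.
for part in plan_parts(250 * MIB):
    print(part)
```

Since each part carries its own number, the parts can be uploaded in any order (or in parallel) and are stitched together at the completion step.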

RANGE GET

- can download a portion of an object in S3 or Glacier
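A Range GET is an ordinary GET request with an HTTP `Range` header. A minimal sketch of building that header, assuming a hypothetical helper that takes a starting offset and a length in bytes:

```python
def range_header(start: int, length: int) -> dict:
    """Build the Range header for fetching `length` bytes starting at `start`."""
    if start < 0 or length <= 0:
        raise ValueError("start must be >= 0 and length must be > 0")
    # HTTP byte ranges are inclusive at both ends, hence the -1.
    return {"Range": f"bytes={start}-{start + length - 1}"}


print(range_header(0, 1024))     # first kilobyte of the object
print(range_header(1024, 512))   # the following 512 bytes
```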

CROSS REGION REPLICATION

- asynchronously replicate all new objects in source bucket in one region to target bucket in another region.

- only new objects, old objects must be copied manually

- versioning must be turned on for both the source and target buckets

- IAM policy to give S3 permission

LOGGING

- track requests to S3

- off by default

EVENT NOTIFICATIONS

- Sent in response to actions taken on an object uploaded or stored in S3

- enables client to run workflows, send alerts, or perform actions

- set at bucket level

AMAZON GLACIER

- unlimited

- suited to large archives such as TAR or ZIP files

- 99.999999999% durability

Archives

- contain up to 40TB

- unlimited number of archives

- unique ID

- automatically encrypted

- immutable

Vaults

- containers for archives

- each account up to 1000 vaults

- IAM or vault policies for access

Vault Locks

- vault lock policy for Write Once Read Many

- once locked, policy cannot be changed

Data Retrieval

- 5% free each month

- 3-5 hours later

Source

https://www.mattbutton.com/2017/10/08/aws-solution-architect-associate-exam-study-notes-s3-simple-storage-service-cloudfront-and-storage-gateway/

https://www.kecklers.com/amazon-s3-notes/

https://docs.aws.amazon.com/AmazonS3/latest/gsg/s3-gsg.pdf

https://iaasacademy.com/aws-certified-solutions-architect-associate-exam/amazon-s3-certified-solution-architect-exam-key-notes/

https://searchaws.techtarget.com/quiz/Test-your-knowledge-Amazon-Simple-Storage-Service-quiz
